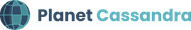

Learn how to scrape websites with Astra DB, Python, Selenium, Requests HTML, Celery, & FastAPI.

180 minutes • Advanced

Updated October 19, 2021


This walkthrough follows along with CodingEntrepreneurs' video tutorial.

Quick Start

- Sign up for DataStax Astra, or log in to your existing account.

- Create an Astra DB database if you don't already have one.

- Create an Astra DB keyspace called `sag_python_scraper` in your database.

- Generate an Application Token with the role of `Database Administrator` for the Organization that your Astra DB is in.

- Get your secure connect bundle from the connect page of your database and save it to your project folder. Rename it to `bundle.zip`.

- Set up your system. Below is a preflight checklist to ensure your system is fully set up to work with this course. All guides and setup can be found in the setup directory of this repo.

  - Install Selenium & Chromedriver (setup guide)

  - Install Redis (setup guide)

  - Create a virtual environment & install dependencies

  - Set up an account with DataStax

  - Create your first AstraDB and get API credentials

  - Use `cassandra-driver` to verify your connection to AstraDB
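
The last checklist item can be sketched roughly as follows. This is a minimal example, not the course's own code: it assumes the Client ID and Client Secret from your Application Token are stored in environment variables named `ASTRA_DB_CLIENT_ID` and `ASTRA_DB_CLIENT_SECRET` (hypothetical names), and that the secure connect bundle was saved as `bundle.zip` as described above. The helper names `load_astra_settings` and `verify_connection` are also illustrative.

```python
import os
from pathlib import Path


def load_astra_settings(env=os.environ):
    """Collect connection settings; the variable names are assumptions."""
    settings = {
        "client_id": env.get("ASTRA_DB_CLIENT_ID"),
        "client_secret": env.get("ASTRA_DB_CLIENT_SECRET"),
        "bundle_path": env.get("ASTRA_DB_BUNDLE", "bundle.zip"),
    }
    missing = [key for key, value in settings.items() if not value]
    if missing:
        raise ValueError(f"Missing Astra DB settings: {', '.join(missing)}")
    return settings


def verify_connection(settings):
    """Connect to Astra DB and return the reported Cassandra release version."""
    # Imported here so the settings helper can be used before
    # cassandra-driver is installed.
    from cassandra.auth import PlainTextAuthProvider
    from cassandra.cluster import Cluster

    if not Path(settings["bundle_path"]).is_file():
        raise FileNotFoundError(settings["bundle_path"])

    cluster = Cluster(
        cloud={"secure_connect_bundle": settings["bundle_path"]},
        auth_provider=PlainTextAuthProvider(
            settings["client_id"], settings["client_secret"]
        ),
    )
    try:
        session = cluster.connect()
        row = session.execute("SELECT release_version FROM system.local").one()
        return row[0]
    finally:
        # Always release driver resources, even if the query fails.
        cluster.shutdown()


# Example usage (requires real credentials and network access):
# print(verify_connection(load_astra_settings()))
```

If the call returns a version string, the credentials and bundle are working. A `ValueError` or `FileNotFoundError` points to a missing environment variable or bundle file.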

How this works

Follow along in this video tutorial: https://youtu.be/NyDT3KkscSk.

This series is broken up into 4 parts:

- Scraping: how to scrape and parse data from nearly any website with Selenium & Requests HTML.

- Data models: how to store and validate data with `cassandra-driver`, `pydantic`, and AstraDB.

- Worker & Scheduling: how to schedule periodic tasks (i.e. scraping) integrated with Redis & AstraDB.

- Presentation: how to combine the above steps into a robust web application service.

Here's what each tool is used for:

- Python 3.9 (download) - programming the logic.

- AstraDB (sign up) - a highly performant and scalable database service by DataStax. AstraDB is a Cassandra NoSQL database. Cassandra is used by Netflix, Discord, Apple, and many others to handle astounding amounts of data.

- Selenium (docs) - an automated web browsing experience that allows us to:

  - Run all web-browser actions through code

  - Load JavaScript-heavy websites

  - Perform standard user interactions like clicks, form submits, logins, etc.

- Requests HTML (docs) - we're going to use this to parse an HTML document extracted from Selenium.

- Celery (docs) - Celery provides worker processes that will allow us to schedule when we need to scrape websites. We'll be using Redis as our task queue.

- FastAPI (docs) - a web application framework to display and monitor web scraping results from anywhere.
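
To illustrate how Selenium and Requests HTML fit together, here is a minimal sketch under stated assumptions: Selenium renders the page (including JavaScript) and Requests HTML parses the resulting markup. The function names, the example URL, and the CSS selector are hypothetical, and the code assumes Chrome and Chromedriver are installed as described in the setup checklist.

```python
def fetch_page_source(url, headless=True):
    """Load a page in Chrome via Selenium and return the rendered HTML."""
    # Imported lazily so this module loads even before Selenium is installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    if headless:
        # Run Chrome without opening a visible browser window.
        options.add_argument("--headless")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source
    finally:
        # Close the browser even if loading the page fails.
        driver.quit()


def extract_texts(html_str, selector):
    """Parse HTML with Requests HTML; return the text of each matching element."""
    from requests_html import HTML

    doc = HTML(html=html_str)
    return [element.text for element in doc.find(selector)]


# Example usage (hypothetical URL and selector):
# html_str = fetch_page_source("https://example.com")
# print(extract_texts(html_str, "h1"))
```

Keeping fetching and parsing in separate functions means the parsing step can be tried against saved HTML without launching a browser.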